Gain new perspectives for faster progress directly to your inbox.

Why bigger isn’t always better with AI tools and specialized applications

Since its release in 2022, ChatGPT has redefined the conversation around AI. It’s been hailed as both a miracle and a menace—from positive promises like revolutionizing work and revitalizing cities to negative impacts such as replacing humans in their jobs, this large language model (LLM) is said to be capable of nearly anything and everything.

What’s the difference between ChatGPT and GPT-3/GPT-4?

There are important differences between ChatGPT and the LLMs themselves, named GPT- with a number (i.e., GPT-3 or GPT-4). These are often confused or used interchangeably, but ChatGPT is the chatbot app with the “easy-to-use” interface that runs on top of the more complex LLMs (GPT-3 or GPT-4).

GPT-3 and GPT-4 are different versions in a series of Generative Pre-Trained Transformer models. Transformers are a type of neural network known as language models. These models can learn to recognize patterns and contexts in loosely structured data like words in sentences. Transformers are especially good at that. A generative model, when given a context, can generate an arbitrarily long output from a prompt. GPT models combine these two types of models.

On the other hand, ChatGPT is the app on top of LLMs like GPT-3 or GPT-4. It has a memory module to continue prior conversations, and it has filters, classifiers, and more built-in to minimize toxic or inappropriate answers.

What does it take to build an LLM?

LLMs are impressive. GPT-3 has 175 billion parameters, or values that the model can change independently as it learns. GPT-4, the latest version of the GPT series released in 2023, is even bigger at 1 trillion parameters. As anyone who has used them can attest, these models have incredibly broad knowledge and startlingly good abilities to produce coherent information.

However, these capabilities come, quite literally, with costs. Training these ever-bigger GPT models and deploying apps like ChatGPT is a tremendous engineering feat. It’s estimated that GPT-3 cost $4.6 million to build and at least $87,000 a year to operate in the cloud. GPT-4 likely cost an eye-watering $100 million or more to develop.

On top of these figures are the significant costs of the hardware and the resources to keep it operating while ensuring that its data centers are kept cool. Data centers can use billions of gallons of water, and air conditioning draws energy and contributes to emissions, all of which forces organizations to evaluate the costs and benefits of these powerful tools. Because of these up-front and ongoing costs, LLMs are already prohibitively expensive for most private companies, academia, and public sector organizations, and this will continue as the models grow larger and more powerful.

The limitations of LLMs

Thanks to specific structural components, transformer models capture the relationship between different inputs, and thanks to a vast amount of sample texts, modern LLMs have become very good at extracting the broad semantics of a piece of text and keeping track of the relationships between textual elements. Generative models like GPT-3 have gone one step further and learned to keep track of these relationships across both question and answer. The results are often compelling. We can ask ChatGPT for a number between 0 and 100, or whether cholesterol is a steroid, and will likely get the correct answer.

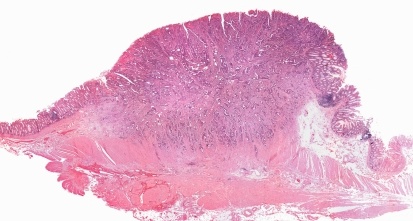

For specialized applications like scientific research, however, large language models can struggle to go beyond broad semantics and understand nuanced, specific information. Why is this the case? First, LLMs are not immune to the “garbage-in/garbage-out” problem. Second, even with high-quality training data, the relevant training information may be underrepresented.

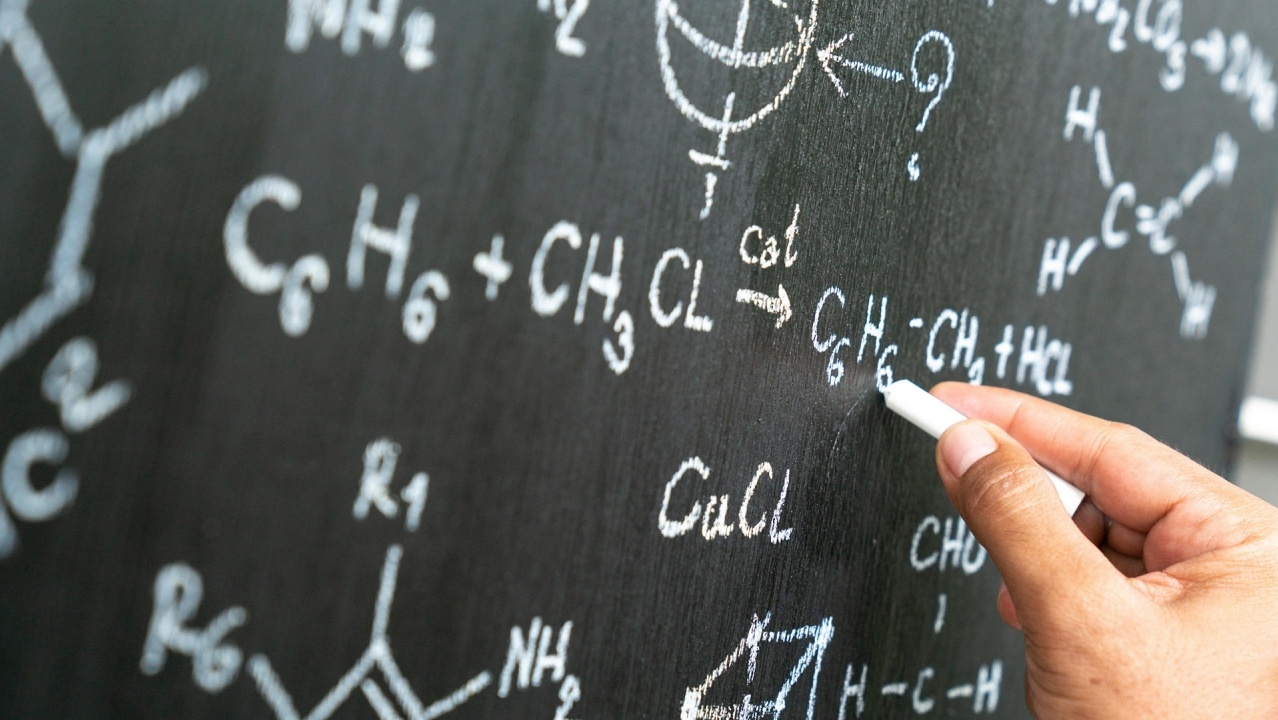

Broad, often-described topics are well captured by the LLMs, but narrow, specialized topics are almost always underrepresented and are bound to be less well-handled. For example, a large language model can get to the right abstraction level and determine if something is a steroid molecule. It could even recognize two steroid molecules in the same family, but it couldn’t consistently recognize if one is extremely toxic and the other is not. The ability of the large language model to make that distinction depends on the data used for training and whether the correct information was recognized and “memorized”. If it was hidden in a sea of bad or conflicting information, then there is no way for the model to come up with the correct answer.

Some might counter that more data, cleaner data, and bigger models would fix that problem. They might be right, but what if we ask a generative LLM for a random number between 0 and 100? Can we be sure that the number given is actually random? To answer that question, we need to go beyond lexical semantics and memorized facts, beyond LLMs, and toward AI agents. Here, the agent would construct an executable piece of code using validated procedures, pass it on to another process to run it, process the result, and present the answer back to the user.

Specific challenges with scientific data

As my colleagues at CAS know, scientific data can be much more complex than text, and most problems can’t be expressed in one or two questions.

When using AI-driven tools for scientific research, we must ask ourselves: what problem are we trying to solve? Many problems involve language or loosely structured sequences, so language models are a perfect fit. What about tabular data, categorical data, knowledge graphs, and time series? These forms of data are necessary for scientific research, yet LLMs can’t always leverage them. That means that LLMs alone cannot provide the level of specificity needed for an application like molecular research. Instead, similar to how an orchestra needs numerous instruments to make a cohesive sound, science needs multiple tools in its AI toolbox to produce coherent results.

A systems approach for depth as well as breadth

If LLMs alone aren’t right for scientific research, then what is? The answer is a systems approach that uses multiple types of models to generate specialized outputs. By layering language models and neural networks with traditional machine learning tools, knowledge graphs, cheminformatics, and bioinformatics, as well as statistical methods such as TF-IDF, researchers can include deep, nuanced information in their AI-driven programs.

These tools can provide the specific results needed for tasks such as developing new drug molecules or inventing a new compound. Knowledge graphs are particularly valuable because these act as a ground truth—reliably connecting known entities: a molecule, reaction, published article, controlled concept, etc. An ideal use case is leveraging a deep neural network that can say, “This is a certain type of substance,” along with a knowledge graph that will validate accuracy. This is how we get to the reliable facts needed in scientific research.

This type of systems approach is essentially a fact-checking or verification function for improved data reliability, and it’s proving itself effective in specialized applications. For example, Nvidia recently released Prismer, a vision-language model designed to answer questions about or provide captions for images. This model uses a “mixture of experts” approach which trains multiple smaller sub-models. The depth of knowledge with this model has provided quality results without massive training—it matched the performance of models trained on 10 to 20 times as much data.

Google is also working on a similar approach, where knowledge is extracted from a general-purpose “teacher” language model into smaller “student” models. The student models present better information than the larger model due to their deeper knowledge—one student model trained on 770m parameters outperformed its 540-bn-parameter teacher on a specialized reasoning task. While the smaller model takes longer to train, the ongoing efficiency gains are valuable as it is both cheaper and faster to run.

Improving scientific research

Another example of a successful systems approach is PaSE, or the Patent Similarity Engine, developed by my colleagues and me at CAS, which powers unique features in CAS STNext and CAS SciFinder. We built this model as part of our collaboration with Brazil’s National Institute of Industrial Property (INPI), which is their national patent office. It was designed to process huge amounts of information in just minutes so that researchers could tackle persistent patent backlogs.

The solution includes a language model that uses the same critical machine-learning techniques as the GPT family, but it layers on additional types of learning including knowledge graphs, cheminformatics, and traditional information-retrieval statistical methods. By training the model on the world’s science, such as the full-text patents and journals inside of the CAS Content CollectionTM, PaSE achieved the depth and breadth needed to find “prior art” 50% faster than manual searching.

For patent offices, proving that something doesn’t exist is extremely challenging. Think of the adage, “Absence of evidence is not evidence of absence.” Our data scientists trained and optimized the model in conjunction with expert patent searchers, the Brazilian INPI team, and a unique combination of AI tools that identified prior art with 40% less manual searching. Due to this performance and the reduction in patent backlogs, it was awarded the Stu Kaback Business Impact Award by the Patent Information Users Group in 2021.

The path ahead for large language models in science

This experience shows that LLMs will continue to have a significant role in scientific research going forward, but contrary to popular belief, this one tool is not a panacea for every problem or question.

I like to think of these models in terms of organizing a messy room in your house, say, a closet or an attic. Different people may organize items in those rooms in different ways. One person may organize everything by color, while someone else puts all the valuables together, and another person organizes them by function. None of these approaches are wrong, but they may not be the type of organization you want or need. This is essentially the issue with LLMs that may organize information in a certain way, but it’s not the way a scientist or researcher needs.

For specialized applications where a user requires a certain outcome, such as protein sequences, patent info, or chemical structures, AI-powered models must be trained to organize and process information in specific ways. They need to organize the data, outcomes, and variables in the way their users want for optimal training and predictions.

To learn more about the impact of that data, their representation, and the models that are improving predictions in science, check out our case studies with CAS Custom Services. Want to learn more about the emerging landscape of AI and chemistry? Read our latest white paper on the opportunities AI holds for chemistry or explore our resources on how AI can improve productivity in patent offices worldwide.